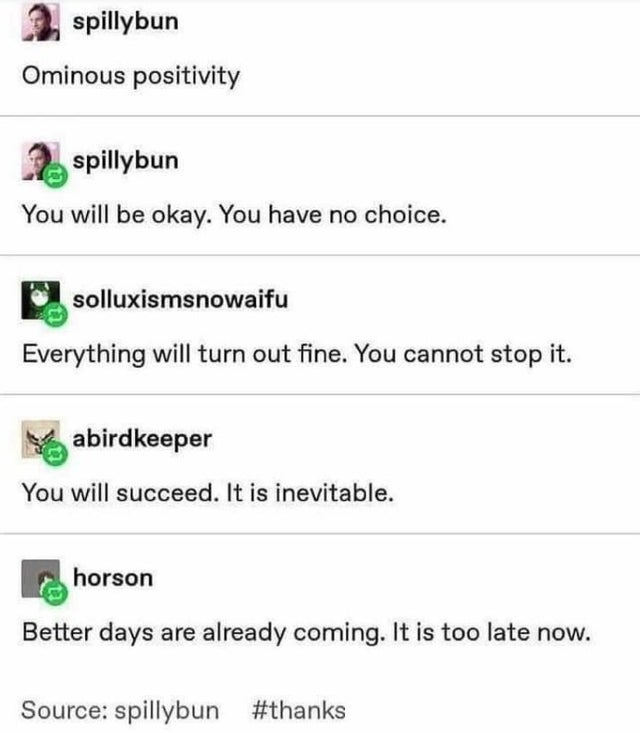

A NEW CURE FOR AN OLD SCOURGE

Evidence of smallpox has been found in the scars of Ancient Egyptian mummies dating from 1600 BC, and the disease certainly reached Ancient Greece before 400 BC. The first authoritative description of smallpox was by the great Persian physician Al-Razi, who wrote a treatise on the subject in AD 910. The symptoms began several days after exposure to the disease, with high fever, aching limbs, vomiting and delirium. Three days later a rash of red spots appeared, mainly on the face (and often the eyes), but also the mouth and other parts of the body. Within days these spots became blisters filled with pus. The disease was highly contagious and had a mortality rate varying from 20 per cent to 40 per cent, while those who survived suffered from gross disfigurement (the scabs from the blisters leaving pitted scars in the skin) and often blindness. It is estimated that between 1600 and 1800, as much as a third of the population of London had faces disfigured with smallpox scars and the disease accounted for two-thirds of those who were blind. It afflicted all levels of society. Queen Mary, who ruled Britain with her husband King William, died of smallpox in 1694. In 1713 the 23-year-old Lady Mary Montagu, who had scandalously eloped with a Whig politician and was widely regarded as the most beautiful and talented woman of her time, was struck down by the disease. As she recorded in a poem:

“In tears, surrounded by my friends I lay,

Mask’d o’er and trembling at the sight of day”

She survived, but was left with badly disfigured skin and no eyebrows. Despite this, she continued with her literary salon, impressing the poet Alexander Pope with her talents. Later, after she had spurned an amorous advance from the poet, he would satirize her in his poem The Dunciad. But Lady Montagu was sufficiently robust to weather such an immortal pinprick, and at the age of 50 eventually eloped once more – this time to the continent with a young Italian writer, who promptly abandoned her. She continued writing the poetry and essays which have deservedly established her in the feminist canon, and at the age of 57 she finally settled down with a 30-year-old Italian count on the shores of Lake Garda.

However, it is arguable that Lady Montagu’s greatest contribution lay neither in her literary talents, nor in the considerable qualities of her humanity. Three years after she had been struck down with smallpox, the 26-year-old beauty travelled to Constantinople with her husband, who had been appointed ambassador to the court of the Turkish sultan. Here she came across the practice of ‘ingrafting’, which the Turkish women used to protect themselves against smallpox. In a long letter to a friend in England she described how ‘the old woman comes with a nutshell full of matter of the best sort of smallpox, and asks what vein you please to have open… and puts into the vein as much venom as can lie upon the head of her needle.’ The ingrafting would be followed by a mild dose of smallpox, which left no disfigurement, and appeared to protect the subject against any further infection from the disease. Lady Montagu went on, ‘Every year thousands undergo this operation… and you may believe I am very well satisfied of the safety of this experiment, since I intend to try it on my dear little son.’

Lady Montagu was as good as her word, and the ingrafting of her son proved successful. When she returned to London she mounted a campaign to introduce the practice into England. The Latin word for smallpox is variola, and consequently this practice became known as variolation. Intent on publicity, in 1721 Lady Montagu invited three members of the Royal College of Physicians, along with several journalists, to witness the variolation of her daughter. News of the success of the operation quickly spread, and eventually the Prince of Wales, the future George II, was persuaded to have his two daughters variolated.

– A Brief History of Medicine from Hippocrates to Gene Therapy. Paul Strathern. Robinson. London, UK. (2005)

The just-world hypothesis or just-world fallacy is the cognitive bias that assumes that “people get what they deserve” – that actions will have morally fair and fitting consequences for the actor. For example, the assumptions that noble actions will eventually be rewarded and evil actions will eventually be punished fall under this hypothesis. In other words, the just-world hypothesis is the tendency to attribute consequences to, or expect consequences as the result of, either a universal force that restores moral balance or a universal connection between the nature of actions and their results. This belief generally implies the existence of cosmic justice, destiny, divine providence, desert, stability, and/or order. It is often associated with a variety of fundamental fallacies, especially in regard to rationalizing suffering on the grounds that the sufferers “deserve” it.

The hypothesis popularly appears in the English language in various figures of speech that imply guaranteed punishment for wrongdoing, such as: “you got what was coming to you”, “what goes around comes around”, “chickens come home to roost”, “everything happens for a reason”, and “you reap what you sow”. This hypothesis has been widely studied by social psychologists since Melvin J. Lerner conducted seminal work on the belief in a just world in the early 1960s.[1] Research has continued since then, examining the predictive capacity of the hypothesis in various situations and across cultures, and clarifying and expanding the theoretical understandings of just-world beliefs.[2]

In 1966, Lerner and his colleagues began a series of experiments that used shock paradigms to investigate observer responses to victimization. In the first of these experiments conducted at the University of Kansas, 72 female participants watched what appeared to be a confederate receiving electrical shocks under a variety of conditions. Initially, these observing participants were upset by the victim’s apparent suffering. But as the suffering continued and observers remained unable to intervene, the observers began to reject and devalue the victim. Rejection and devaluation of the victim were greater when the observed suffering was greater. But when participants were told the victim would receive compensation for her suffering, the participants did not derogate the victim.[6] Lerner and colleagues replicated these findings in subsequent studies, as did other researchers.[8]

To explain these studies’ findings, Lerner theorized that there was a prevalent belief in a just world. A just world is one in which actions and conditions have predictable, appropriate consequences. These actions and conditions are typically individuals’ behaviors or attributes. The specific conditions that correspond to certain consequences are socially determined by a society’s norms and ideologies. Lerner presents the belief in a just world as functional: it maintains the idea that one can influence the world in a predictable way. Belief in a just world functions as a sort of “contract” with the world regarding the consequences of behavior. This allows people to plan for the future and engage in effective, goal-driven behavior. Lerner summarized his findings and his theoretical work in his 1980 monograph The Belief in a Just World: A Fundamental Delusion.[7]

Lerner hypothesized that the belief in a just world is crucially important for people to maintain for their own well-being. But people are confronted daily with evidence that the world is not just: people suffer without apparent cause. Lerner explained that people use strategies to eliminate threats to their belief in a just world. These strategies can be rational or non-rational. Rational strategies include accepting the reality of injustice, trying to prevent injustice or provide restitution, and accepting one’s own limitations. Non-rational strategies include denial, withdrawal, and reinterpretation of the event.[9]